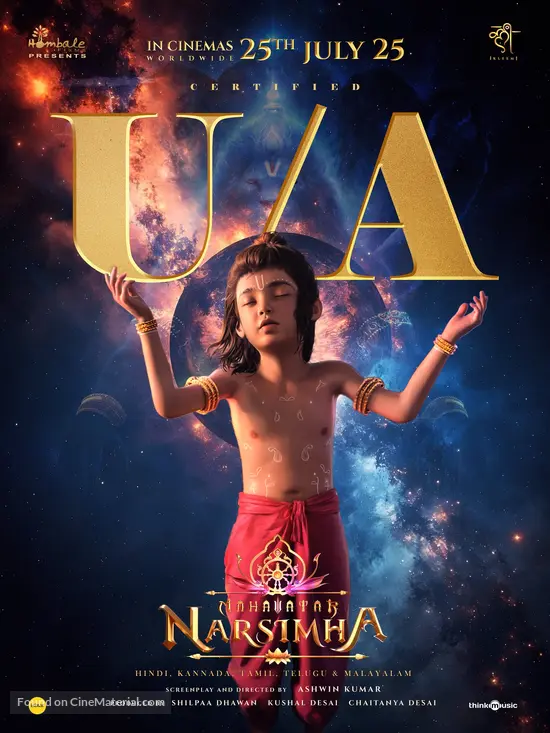

Mumbai [India], July 29: Ashwin Kumar's new mythological animated film, 'Mahavatar Narsimha', has become an unexpected hit with audiences across the country.

Swati Bhat

Produced by Hombale Films, 'Mahavatar Narsimha' centers on Lord Narasimha, the half-man, half-lion incarnation of Lord Vishnu. The film has drawn a positive response, performed well at the box office and earned praise from ISKCON. ISKCON Siliguri has booked an entire cinema hall so its devotees can watch the movie. "We have reserved the entire cinema here for our devotees. Tomorrow, more devotees will come to see the film. Many devotees have gathered for the screening. It conveys a powerful message to people...This film promotes Indian culture and makes it easier for people to understand our philosophy," Nam Krishna Das, the spokesperson for ISKCON Siliguri, told TC.

Earlier, the organization posted on its official social media account that 'Mahavatar Narsimha' is a "testimony to the dedication of numerous ISKCON members." It added that these members have worked diligently to help the younger generation engage with spiritual content and understand the culture.

"Audiences across the country have praised the storyline, the VFX and the overall presentation. Watch it with your family and friends and feel the presence of Lord Narasimha!" the post added.

Written and directed by Ashwin Kumar, 'Mahavatar Narsimha' has connected with viewers of all ages, with positive responses pouring in across social media platforms. The makers have also shared updates on the film's performance, saying it was the #1 movie at the Indian box office on Monday, July 28.

According to Bollywood trade analysts, the Hindi version of the animated film collected Rs 14.70 crore in its first three days of release, adding another hit to Hombale Films' slate.